Realtime semantic space exploration

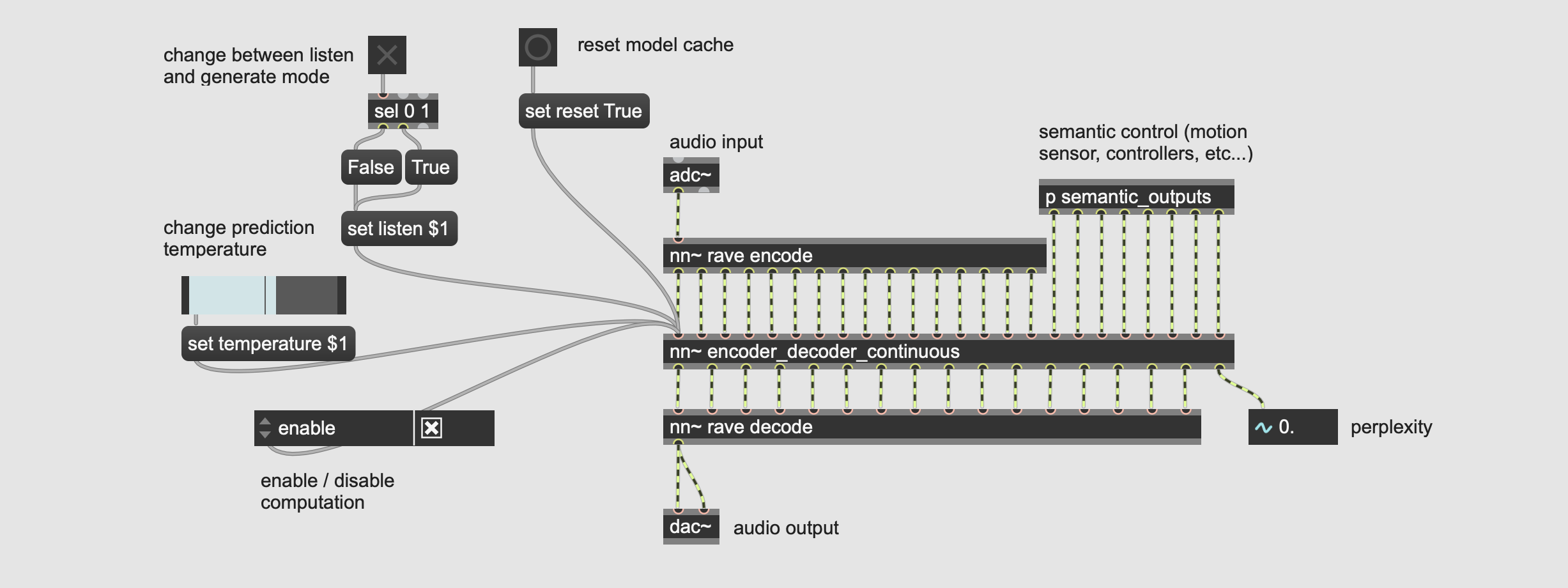

I have recently released the code for MSPrior, which can be used in conjunction with RAVE to perform conditional and unconditional generation in realtime, right inside Max/MSP and PureData using nn~.

A feature coming soon is conditioning the prior model on a semantic representation extracted with a self-supervised model. I have tried this setup on a dataset of 78rpm recordings, denoised using the technique described in this article. Here are a few samples from the dataset, whose total size is approximately two years of non-stop music.

| Samples from the dataset |
|---|

Combining this system with realtime hand tracking, we can effectively shape the sound using our bare hands, leading to an intuitive way to steer the generation.
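To give a rough idea of what steering with a hand can look like in code, here is a minimal sketch, not the actual implementation. It assumes the hand tracker outputs normalized coordinates in [0, 1] and that each semantic dimension lives in some bounded range; the `HandToSemantic` class, its bounds and its smoothing factor are all hypothetical and only serve as illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HandToSemantic:
    """Hypothetical helper: maps normalized hand coordinates to smoothed conditioning values."""

    low: float = -3.0    # assumed lower bound of each semantic dimension
    high: float = 3.0    # assumed upper bound of each semantic dimension
    alpha: float = 0.2   # smoothing factor in (0, 1]; smaller means smoother
    state: list = field(default_factory=list)

    def update(self, coords):
        # Clamp each coordinate to [0, 1], then rescale it to [low, high].
        target = [
            self.low + (self.high - self.low) * min(1.0, max(0.0, c))
            for c in coords
        ]
        if not self.state:
            # First frame: jump straight to the target.
            self.state = target
        else:
            # Exponential smoothing to avoid jitter from the tracker.
            self.state = [s + self.alpha * (t - s) for s, t in zip(self.state, target)]
        return list(self.state)


mapper = HandToSemantic()
print(mapper.update([0.5, 0.5]))  # hand at the center of the frame
print(mapper.update([0.9, 0.1]))  # hand moved, values drift toward the new target
```

The smoothing step matters because small hand movements can have a large effect on the output, so raw tracker jitter would otherwise be clearly audible. How the resulting values reach the model (for instance as messages sent to a Max/MSP or PureData patch) is left out here.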

Semantic exploration using real-time hand tracking, rave and msprior ! 🤚 The semantic space is much more structured than rave's latent space, and subtle movements can really make a difference 😁 https://t.co/cKP5UOd7jc pic.twitter.com/ZsCzgdfpRW

— Antoine Caillon (@antoine_caillon) May 16, 2023

Things are still really experimental at this point, but I’m planning on releasing everything by June 30 (self-supervised code, pretrained models).